Service Testing with Docker Containers

14 Feb 2018

During the past few months I've been helping a company improve their automated testing practices. Besides coaching on TDD, I also had the chance to work on a project consisting of multiple services, where I was able to introduce some service tests using Docker. It's the first time I've used Docker on a real project, and I was quite happy with how useful it is for service tests in a distributed environment. In this post I will describe a few of the things I did and learnt along the way.

Motivation

It's common wisdom that having tests on the lowest level is very beneficial, which is also what the test pyramid symbolizes.

- Unit tests can be executed a lot faster than higher-level tests

- It's easier to identify why a test failed

But having unit tests is often not enough. In reality, failures often hide in the integration of components, be it in the technical wiring or in the way the components interact.

If all your remote calls are mocked, you might never notice that you have configured your HTTP client the wrong way. If you never run your tests on a real database, you might never notice that your transaction is never committed or that the SQL you use for migrating the table only works on your in-memory database.

One way to solve this is to build end-to-end tests that drive the application through the frontend and execute real user behaviour on the system. This might sound like a good idea at first, but it often leads to a very fragile test setup. Tests might pass one day and fail the next.

- Some network segment might not be available

- Some other application team might have dropped their database on the staging environment

- A million other things might have happened

But all you can see is that your tests failed and you have to investigate why.

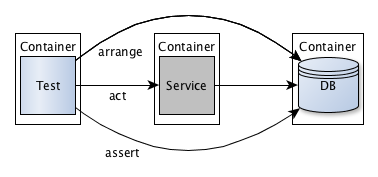

You can have far more reliable tests if you only test some components in isolation. Start up a service with all its required dependencies, execute a request on it and check that the result is as expected, be it a response, an entry in a database, a message on a queue or anything else.

If the system uses any remote services, you can integrate very simple mock services in their place. After all, the intention of those tests is not to test everything that can be tested but only some representative areas.

Docker

Now, how does Docker help with this? It allows you to easily start your components as separate containers that can interact with each other. Each container runs one of the components: there is one container for each database, one container for the service, one container for each mock and so on.

Containers are started from images that are specified using a Dockerfile. A Dockerfile can extend an existing image (e.g. an image that provides a Java runtime) and add application-specific settings (e.g. runtime flags). An example: the following Dockerfile is what JHipster generates for a service.

    FROM openjdk:8-jre-alpine

    ENV SPRING_OUTPUT_ANSI_ENABLED=ALWAYS \
        JHIPSTER_SLEEP=0 \
        JAVA_OPTS=""

    # add directly the war
    ADD *.war /app.war

    EXPOSE 8081
    CMD echo "The application will start in ${JHIPSTER_SLEEP}s..." && \
        sleep ${JHIPSTER_SLEEP} && \
        java ${JAVA_OPTS} -Djava.security.egd=file:/dev/./urandom -jar /app.war

It extends a JRE image that provides the Java runtime, adds the war file with the application code and defines the command to start it. This Dockerfile can be used to create an image using docker build, and the resulting image can be run as a container using docker run.

If you have multiple containers that should be run together, as is the case with the tests we are executing here, you can use Docker Compose. You specify the services in a YAML file, where you can pass in environment variables and many other settings. Here is a simple example for a service that uses PostgreSQL.

    version: '2'
    services:
      my-service:
        image: my-service
        environment:
          - SPRING_DATASOURCE_URL=jdbc:postgresql://postgresql:5432/my-service
          - SPRING_DATASOURCE_USERNAME=user
          - SPRING_DATASOURCE_PASSWORD=
      postgresql:
        image: postgres:9.6.2
        environment:
          - POSTGRES_USER=user
          - POSTGRES_PASSWORD=
        ports:
          - 5432:5432

This starts two containers: one for the service my-service and one for the database. During execution there is a dedicated network in which the containers can be resolved as hosts by their service name. This is why we can use the URL jdbc:postgresql://postgresql:5432/my-service in this example.

Which environment variables are available of course depends on the image you are using. For PostgreSQL you can see that you can define a user and a password.

One thing you want to make sure of when working with existing images for databases and other components: always use a fixed version. Don't use latest, or you might be in for some surprises when running on different machines.

Writing Tests

What kind of tests you write of course heavily depends on the kind of application you are looking at. For many applications it could mean sending an HTTP request to the service and checking afterwards whether there is a new entry in the database. Of course there can also be many other results to check: a file that is created, a message that is sent to a queue, another request that is sent to another service.

As you don't need to communicate with your service in process, there is also no need to use the same technology, and even if you do, I don't think you should share any code. For example, for a Java application that uses Hibernate I wouldn't reuse the entity classes in the tests but use plain JDBC or any other technology instead. Just implement the parts that you really need to validate the basic functionality.
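A test-side helper in this spirit could look like the following sketch. It uses only JDK classes and shares nothing with the service code. The class name ServiceProbe and both method names are hypothetical, and countRows assumes that a JDBC driver for your database is on the test classpath and that the table name is a trusted constant from the test.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

/** Hypothetical test helper: talks to the service from the outside only. */
final class ServiceProbe {

    private ServiceProbe() {}

    /** Sends a JSON body via HTTP POST and returns the response status code. */
    static int postJson(String url, String json) {
        try {
            HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setRequestMethod("POST");
            conn.setRequestProperty("Content-Type", "application/json");
            conn.setDoOutput(true);
            try (OutputStream out = conn.getOutputStream()) {
                out.write(json.getBytes(StandardCharsets.UTF_8));
            }
            int status = conn.getResponseCode();
            conn.disconnect();
            return status;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Counts the rows of a table with plain JDBC, without any entity classes. */
    static int countRows(String jdbcUrl, String user, String password, String table)
            throws SQLException {
        // The table name is concatenated, so only pass constants from the test itself.
        try (Connection connection = DriverManager.getConnection(jdbcUrl, user, password);
             Statement statement = connection.createStatement();
             ResultSet rows = statement.executeQuery("select count(*) from " + table)) {
            rows.next();
            return rows.getInt(1);
        }
    }
}
```

A test would call postJson against the service (for example at http://my-service:8081 plus whatever path your API exposes) and then compare countRows before and after the request.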

A Java library that can be quite useful for writing these kinds of tests is Awaitility. It implements mechanisms for testing asynchronous interactions, mostly by means of polling. The code for waiting for a condition can look something like this:

    await().atMost(5, TimeUnit.SECONDS).until(hasNewEntry());

with hasNewEntry():

    private Callable<Boolean> hasNewEntry() {
        return () -> jdbcTemplate.queryForObject("select count(*) from my_database_entry", Integer.class) > 0;
    }
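To make the polling idea more concrete, here is a minimal sketch of such a loop in plain Java. It is not how Awaitility is implemented and it has none of its features. The class Polling and the method waitUntil are made up for illustration, and treating an exception in the check as "not yet" is an assumption of this sketch.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.TimeUnit;

/** Hypothetical helper that illustrates polling; not part of Awaitility. */
final class Polling {

    private Polling() {}

    /**
     * Evaluates the condition repeatedly, sleeping pollIntervalMillis between
     * attempts, until it returns true or the timeout has elapsed.
     * Returns whether the condition was met in time.
     */
    static boolean waitUntil(Callable<Boolean> condition, long timeout, TimeUnit unit,
                             long pollIntervalMillis) {
        long deadline = System.nanoTime() + unit.toNanos(timeout);
        while (true) {
            try {
                if (Boolean.TRUE.equals(condition.call())) {
                    return true;
                }
            } catch (Exception e) {
                // Assumption of this sketch: a failing check counts as "not yet".
            }
            if (System.nanoTime() - deadline >= 0) {
                return false;
            }
            try {
                Thread.sleep(pollIntervalMillis);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
    }
}
```

With this helper the check from above would read Polling.waitUntil(hasNewEntry(), 5, TimeUnit.SECONDS, 100). In a real test suite the library is the better choice, as it also gives you readable failure messages.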

The easiest way to run the tests is to start a container for them as well, so that the other services are reachable by their service name as hostname in the same network. For Java-based tests you can derive your image from the maven image and add your project files to it. If you pass the flag --abort-on-container-exit to docker-compose up, all containers are shut down as soon as one container exits, which most likely will be the test container.

You can add the integration tests to the Docker Compose file:

      integrationtest:
        build: integrationtest
        command: ./wait-for-it.sh -t 150 my-service:8081 -- mvn test
        volumes:
          - $PWD/target/surefire-reports:/app/target/surefire-reports
          - ~/.m2/repository:/root/.m2/repository

I am using wait-for-it, a script that only executes a command once a certain service is available. In this case we are waiting until something is available at my-service:8081, with a timeout of 150 seconds.
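If you would rather do the waiting inside the test code, the same idea can be sketched in a few lines of Java: try to open a TCP connection until it succeeds or a timeout is reached. This is not the wait-for-it script, only an illustration of the concept. The class PortWaiter, the method waitForPort, the one-second connect timeout and the 200 ms retry interval are all assumptions of this sketch.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical Java counterpart to the idea behind wait-for-it. */
final class PortWaiter {

    private PortWaiter() {}

    /**
     * Returns true as soon as a TCP connection to host:port can be opened,
     * or false if that did not happen within timeoutMillis.
     */
    static boolean waitForPort(String host, int port, long timeoutMillis) {
        long start = System.nanoTime();
        long timeoutNanos = timeoutMillis * 1_000_000L;
        while (System.nanoTime() - start < timeoutNanos) {
            try (Socket socket = new Socket()) {
                // Give each connection attempt at most one second.
                socket.connect(new InetSocketAddress(host, port), 1000);
                return true;
            } catch (IOException e) {
                // Host not resolvable or port not open yet: retry after a pause.
            }
            try {
                Thread.sleep(200);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
        return false;
    }
}
```

For the setup above the call would be PortWaiter.waitForPort("my-service", 8081, 150_000). Keep in mind that an open port only tells you that something is listening, not that the application has finished starting.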

The test output directory and the local Maven repository are added as volumes, which makes them available in the container filesystem. Mounting the repository prevents the artifacts from being downloaded again and again, and the test output directory is where the reports are written.

Once a setup like this is in place, people might be keen to write as many tests as possible using this approach, testing different inputs and error conditions. Don't. Just use it for what it is meant for: testing the integration of the different technologies. For the business tests, go with the smaller integration or unit tests.

When there are other services involved, you of course need to make sure that those are at least available. Having very simple dummies that return predefined results can get you a long way already. Again, you can use any technology for this. For HTTP-based services, tools like Express or Spring MVC can be good choices.
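For very small dummies you may not even need a framework. The following sketch uses the HTTP server that ships with the JDK to return one predefined JSON document. The class DummyService, the method start and the path and payload in the usage note are invented for illustration.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

/** Hypothetical dummy service that answers with a predefined result. */
final class DummyService {

    private DummyService() {}

    /**
     * Starts an HTTP server on the given port (0 picks any free port) that
     * answers requests below the given path with a fixed JSON body.
     */
    static HttpServer start(int port, String path, String cannedJson) {
        try {
            HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
            byte[] body = cannedJson.getBytes(StandardCharsets.UTF_8);
            server.createContext(path, exchange -> {
                exchange.getResponseHeaders().set("Content-Type", "application/json");
                exchange.sendResponseHeaders(200, body.length);
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            });
            server.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

A call like DummyService.start(8080, "/customers/42", "{\"id\":42}") from a main method would be enough to run such a dummy in its own container. The returned server can be stopped with stop(0).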

Conclusion

It took me a while to get used to the different concepts in Docker and how to combine everything, but it can be a really powerful tool to test services in isolation.

This approach is a lot better than what I have seen in many companies: using real services in a dev or staging environment for testing, which means that your tests fail whenever those services go down or contain different data than expected. For a more modern approach of testing in production (which I don't have a lot of experience with) have a look at this post by Cindy Sridharan.