Elasticsearch and the Languages of Singapore

04 Sep 2017

In June I gave a short talk at the first edition of Voxxed Days Singapore on using Elasticsearch to search the different languages of Singapore. This is a transcript of the talk; a video recording is available as well. We'll first look at some details of data storage in Elasticsearch before we see how it can be used to search the four official languages of Singapore.

If you are already familiar with Elasticsearch you can also jump to the section on the different languages directly.

Elasticsearch

Elasticsearch is a distributed search engine. Communication, queries and configuration are mainly done using HTTP and JSON. It is written in Java and based on the popular library Lucene. Mostly by means of Lucene, Elasticsearch supports searching a multitude of natural languages, and that is what we are looking at in this article.

Getting started with Elasticsearch is pretty easy. On their website you can download different archives that you can just unpack. They contain scripts that can then be executed; the only prerequisite is a recent version of the Java Virtual Machine.

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-5.4.1.zip

# zip is for Windows and Linux

unzip elasticsearch-5.4.1.zip

elasticsearch-5.4.1/bin/elasticsearch

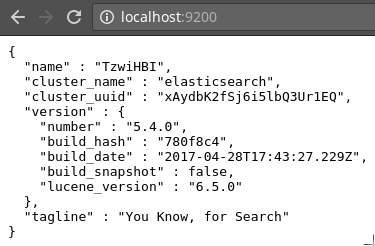

Once started you can directly access the HTTP interface of Elasticsearch on the default port 9200.

Without any configuration you can then start writing data to the search index. I am using curl in the examples but you can use any HTTP client.

curl -XPOST "http://localhost:9200/voxxed/doc" -d '

{

"title": "Hello world!",

"content": "Hello Voxxed Days Singapore!"

}'

We are posting a simple JSON document that contains two fields: title and content. The URL contains two path fragments: the index name (voxxed), which is a logical collection of documents, and the type (doc), which determines how the documents are stored internally.

Now that the data is stored we can immediately search it.

curl -XPOST "http://localhost:9200/voxxed/doc/_search" -d '

{

"query": {

"match": {

"content": "Singapore"

}

}

}'

We are again posting a JSON document, this time appending _search to the URL. The body of the request contains a query in a JSON structure, the so-called query DSL. Simply put, this searches for all documents in the index that contain the term Singapore in the content field.

This returns another JSON structure that, among other information, contains the document we indexed initially.

{

"took" : 127,

[...]

},

"hits" : {

"total" : 1,

"max_score" : 0.2876821,

"hits" : [

{

"_index" : "voxxed",

"_type" : "doc",

"_id" : "AVwAP4Aw9lCQvRKyIhgJ",

"_score" : 0.2876821,

"_source" : {

"title" : "Hello world!",

"content" : "Hello Voxxed Days Singapore!"

}

}

]

}

}

So how does searching work internally? Elasticsearch provides an inverted index that is used for the lookup of the search terms. For our simple example the inverted index for the content field looks similar to this.

| Term | Doc Id |
|---|---|
| days | 1 |
| hello | 1 |
| singapore | 1 |
| voxxed | 1 |

Each word of our phrase Hello Voxxed Days Singapore! is stored with a pointer to the document it occurs in. Words are extracted, all punctuation is removed and the words are lowercased. When a search for a term is executed, the same processing is applied to the query and the result is looked up directly in the index.
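The lookup described above can be sketched in a few lines of Python. This is only a toy model, not how Lucene stores its index: the function names `analyze`, `build_inverted_index` and `search` are made up for this illustration, and the regular expression is a rough approximation of the word extraction.

```python
import re
from collections import defaultdict

def analyze(text):
    """Lowercase the text and extract word tokens, dropping punctuation."""
    return re.findall(r"\w+", text.lower())

def build_inverted_index(docs):
    """Map each term to the sorted ids of the documents that contain it."""
    postings = defaultdict(set)
    for doc_id, text in docs.items():
        for term in analyze(text):
            postings[term].add(doc_id)
    return {term: sorted(ids) for term, ids in postings.items()}

def search(index, query):
    """Analyze the query like the documents, then look up each term."""
    hits = set()
    for term in analyze(query):
        hits.update(index.get(term, []))
    return sorted(hits)

docs = {1: "Hello Voxxed Days Singapore!"}
index = build_inverted_index(docs)
print(sorted(index))               # ['days', 'hello', 'singapore', 'voxxed']
print(search(index, "Singapore"))  # [1]
```

Because the query goes through the same `analyze` step as the document, searching for Singapore or singapore finds the same entry.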

The process of preparing the content for storage is called analyzing and will be different for different kinds of data and applications. It is encapsulated in an analyzer that processes the incoming text, tokenizes it using a Tokenizer and processes it using optional TokenFilters. By default the Tokenizer splits on word boundaries and there is a filter that lowercases the content.
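To illustrate how a tokenizer and optional token filters fit together, here is a minimal Python sketch. The `Analyzer` class and the helper functions are hypothetical and only mimic the structure; they are not the Lucene classes, and the regular expression is a simplification of real word boundary detection.

```python
import re

def word_tokenizer(text):
    """Split text into word tokens (a rough stand-in for word boundary splitting)."""
    return re.findall(r"\w+", text)

def lowercase_filter(tokens):
    """Token filter that lowercases every token."""
    return [t.lower() for t in tokens]

def make_stop_filter(stop_words):
    """Build an optional token filter that drops the given stop words."""
    def stop_filter(tokens):
        return [t for t in tokens if t not in stop_words]
    return stop_filter

class Analyzer:
    """One tokenizer followed by zero or more token filters, applied in order."""
    def __init__(self, tokenizer, filters=()):
        self.tokenizer = tokenizer
        self.filters = list(filters)

    def analyze(self, text):
        tokens = self.tokenizer(text)
        for token_filter in self.filters:
            tokens = token_filter(tokens)
        return tokens

default_like = Analyzer(word_tokenizer, [lowercase_filter])
with_stop_words = Analyzer(word_tokenizer, [lowercase_filter, make_stop_filter({"the"})])

print(default_like.analyze("Hello Voxxed Days Singapore!"))
# ['hello', 'voxxed', 'days', 'singapore']
print(with_stop_words.analyze("The Languages of Singapore"))
# ['languages', 'of', 'singapore']
```

The order of the filters matters: the stop word filter only matches "The" because the lowercase filter runs before it.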

The analyzing process is also where the language-specific processing can happen. There are some prebuilt analyzers for different languages available that can do different things like character normalization or stemming, which is an algorithmic process that tries to reduce words to their base form. Besides using the analyzers that are shipped with Elasticsearch, you can also define custom analyzers that use some of the predefined tokenizers and token filters.

Analyzers need to be configured up front in the mapping, before documents are stored in the index. To configure the english analyzer for the content field we can issue the following PUT request.

curl -XPUT "http://localhost:9200/voxxed_en" -d'

{

"mappings": {

"doc": {

"properties": {

"content": {

"type": "text",

"analyzer": "english"

}

}

}

}

}'

There's a new index name but the name of the type is the same. This is a common strategy for handling multilingual content: have one index per language, all with the same structure.
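As a sketch of the one-index-per-language idea, the following Python snippet generates the request bodies for several indices that share the same structure. The language codes, the `ANALYZERS` table, the index name voxxed_ms and the helper functions are assumptions made for this illustration; english and standard are analyzers that ship with Elasticsearch.

```python
import json

# Hypothetical table of language code -> analyzer name.
ANALYZERS = {"en": "english", "ms": "standard"}

def index_name(language, prefix="voxxed"):
    """Derive the per-language index name, e.g. voxxed_en."""
    return f"{prefix}_{language}"

def mapping_body(analyzer):
    """Same structure for every language, only the analyzer differs."""
    return {
        "mappings": {
            "doc": {
                "properties": {
                    "content": {"type": "text", "analyzer": analyzer}
                }
            }
        }
    }

for language, analyzer in ANALYZERS.items():
    # Each body would be sent with a PUT request to the index URL.
    print("PUT /" + index_name(language))
    print(json.dumps(mapping_body(analyzer), indent=2))
```

An application can then pick the index by the language of the document or the user.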

Using this mapping we can now search for the term day instead of days as well. The analysis normalizes the words so that both forms can be found. In general, the way a word is stored in the index has a large influence on search quality, and adjusting it is the common way to let users search for words in different forms.
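Why day now matches days can be shown with a deliberately crude Python sketch. The `toy_stem` function below only strips a plural s; it is an assumption for illustration and much simpler than the stemmer the english analyzer really uses.

```python
def toy_stem(term):
    """Strip a plural 's'; a crude stand-in for a real stemming algorithm."""
    if len(term) > 3 and term.endswith("s") and not term.endswith("ss"):
        return term[:-1]
    return term

def analyze(text):
    """Lowercase, drop the exclamation mark, split on whitespace, then stem."""
    return [toy_stem(t) for t in text.lower().replace("!", " ").split()]

# Terms as they would end up in the index for our example document.
indexed_terms = set(analyze("Hello Voxxed Days Singapore!"))

# Both query forms are reduced to the same term that is stored in the index.
print(analyze("day")[0] in indexed_terms)   # True
print(analyze("days")[0] in indexed_terms)  # True
```

The important point is that the index and the query go through the same normalization, so it does not matter which form the user types.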

Languages of Singapore

There are four official languages in Singapore:

- English

- Malay

- Mandarin

- Tamil

We have already seen how we can search English content using the english analyzer. Let's look at Malay next.

Malay

Malay is the national language of Singapore, which is why the national anthem is in Malay. It starts with the following lines:

Mari kita rakyat Singapura Sama-sama menuju bahagia

Malay uses the Latin alphabet and words are separated by whitespace, so we can index this text as it is.

curl -XPOST "http://localhost:9200/voxxed/doc" -d'

{

"title": "Majulah Singapura",

"content": "Mari kita rakyat Singapura Sama-sama menuju bahagia"

}'

We can immediately search this just like we searched the English text. The standard analyzer stores the words in the index essentially as they are, only lowercased.

curl -XPOST "http://localhost:9200/voxxed/doc/_search" -d'

{

"query": {

"match": {

"content": "bahagia"

}

}

}'

We are searching for bahagia which means happiness. The document is found as expected.

For Malay there is no language-specific analyzer available but the standard analyzer works fine. Malay doesn't have a lot of word inflection but has some prefix and suffix rules. One option is to process the text with the Indonesian stemmer that is available. Both languages share many rules but there might also be exceptions.
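A possible configuration for trying the Indonesian stemmer on Malay text could look like the following sketch, which builds the request body in Python. The index name voxxed_ms and the analyzer name malay_sketch are hypothetical; the stemmer token filter with the language indonesian is part of Elasticsearch. Whether it actually improves results for Malay is an assumption that would need testing with real content.

```python
import json

# Body for a PUT request that creates an index, e.g. PUT /voxxed_ms (name assumed).
settings_body = {
    "settings": {
        "analysis": {
            "filter": {
                # Stemmer token filter configured for Indonesian.
                "indonesian_stemmer": {"type": "stemmer", "language": "indonesian"}
            },
            "analyzer": {
                # Hypothetical custom analyzer: standard tokenizer,
                # lowercasing, then Indonesian stemming.
                "malay_sketch": {
                    "type": "custom",
                    "tokenizer": "standard",
                    "filter": ["lowercase", "indonesian_stemmer"],
                }
            },
        }
    },
    "mappings": {
        "doc": {
            "properties": {
                "content": {"type": "text", "analyzer": "malay_sketch"}
            }
        }
    },
}

print(json.dumps(settings_body, indent=2))
```

The printed JSON can be used as the body of the index creation request, in the same way as the mapping for the english analyzer.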

Tamil

Tamil is a bit different as it is written in a different script.

ஆறின கஞ்சி பழங் கஞ்சி

This is a proverb saying "Cold food is soon old food". Even if you can't read it, you can see that the second and fourth words are the same, meaning food.

We can again index this content and then search it.

{

"query": {

"match": {

"content": "கஞ்சி"

}

}

}

There is no special handling for Tamil. The standard tokenizer splits the words correctly. It doesn't matter that it's a different script as Elasticsearch compares the words on the byte level.
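The byte-level comparison can be illustrated with a small Python sketch. Splitting on whitespace with `split()` is only a stand-in for the standard tokenizer, and the UTF-8 encoding is used here to show that equal words produce equal byte sequences regardless of the script.

```python
proverb = "ஆறின கஞ்சி பழங் கஞ்சி"

# Whitespace splitting as a simple stand-in for the standard tokenizer.
tokens = proverb.split()
print(len(tokens))

# The second and fourth word are the same string ...
print(tokens[1] == tokens[3])

# ... and therefore also the same sequence of bytes.
encoded = [t.encode("utf-8") for t in tokens]
print(encoded[1] == encoded[3])

# A query term is found by comparing bytes, no knowledge of Tamil is needed.
query = "கஞ்சி"
print(query.encode("utf-8") in set(encoded))
```

Nothing in this comparison depends on the script, which is why the search works without a Tamil-specific analyzer.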

Mandarin

Mandarin is very different from the others as it uses a different script and no whitespace between words. My creativity is decreasing so this just means Hello Singapore.

你好新加坡

If you index this using the standard analyzer, it will be split into single characters. Using this for search can lead to a lot of false positives. That's why there are alternatives available, most notably the CJKAnalyzer, which works on Chinese, Japanese and Korean text and builds bigrams of the characters.

For the example this leads to:

- 你好

- 好新

- 新加

- 加坡

Even this can lead to invalid or irrelevant words but it is better than searching for single characters. When searching for the word Singapore the document is found correctly.

{

"query": {

"match": {

"content": "新加坡"

}

}

}
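The bigram approach can be sketched in Python to see why the query matches. The `bigrams` function is a simplified illustration of the idea, not the CJKAnalyzer itself, which does more than this.

```python
def bigrams(text):
    """Build overlapping pairs of neighbouring characters."""
    return [text[i:i + 2] for i in range(len(text) - 1)]

doc_terms = bigrams("你好新加坡")
query_terms = bigrams("新加坡")

print(doc_terms)    # ['你好', '好新', '新加', '加坡']
print(query_terms)  # ['新加', '加坡']

# Every bigram of the query also occurs in the document.
print(all(term in doc_terms for term in query_terms))  # True
```

The bigram 好新 shows the downside mentioned above: it spans the boundary between two words and is stored in the index even though it is not a meaningful term.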

An alternative is the Smart Chinese plugin, which is, as the name suggests, smarter than just building bigrams. It uses a probabilistic approach to determine sentence and word boundaries.

Conclusion

Each of the languages of Singapore has its specialities. There is basic support for all of them in Elasticsearch but working with multiple languages can be challenging, in real life as well as in search engines.